The Beginning of the Era of Deep Learning

We humans are complex, but we love to simplify things on our own terms. When we talk about action films, many of us picture “Fight Club” or “Police Story”. If the topic is sci-fi, people probably think of “The Terminator” or “I, Robot”. Why? Because we love examples. We learn from examples. Starting with our parents, teachers and elders as role models, we have learned nearly everything in this world with the aid of examples.

Like us, machines learn more sophisticated things when we give them examples. They can analyze patterns in human behavior and use them to make winning decisions. They can dig deeper and work out the core techniques behind the task assigned to them. That, in layman’s terms, is Deep Learning. Wondering why we started with how humans learn? Deep Learning methods are built on artificial neural networks, which are loosely modeled on the neurons of the human brain.

Deep Learning: The Fusion of Machine Learning and Artificial Intelligence

According to Investopedia’s definition, Deep Learning is an artificial intelligence function that imitates the way the human brain processes data and creates patterns for use in decision making. Deep Learning is also a subset of Machine Learning, which is itself a subset of Artificial Intelligence. Jeff Dean, who drove the adoption and scaling of deep learning within Google and is part of the core team behind TensorFlow, has shared his perspective on what Deep Learning is.


He says: “When you hear the term deep learning, just think of a large deep neural net. Deep refers to the number of layers typically and so this kind of the popular term that’s been adopted in the press. I think of them as deep neural networks generally.” In other words, Deep Learning is a subset of machine learning built on algorithms inspired by the structure and function of the brain, known as artificial neural networks.

So how does Deep Learning work? Deep Learning is a branch of machine learning in which we train an AI model to predict an output from a given input. We can train the model using either supervised or unsupervised learning.

Using Back Propagation:

Backpropagation (backprop) is a method for computing the partial derivatives of a function that is written as a composition of other functions, which is exactly the form a neural net takes. When you solve an optimization problem with a gradient-based method, you need to compute the gradient of the objective function at each iteration. In a neural network, the objective function is a composition of the layers’ functions, so backprop applies the chain rule layer by layer.

There are two ways to compute the gradient: analytic differentiation and approximate differentiation using finite differences. The finite-difference approach is extremely expensive compared with analytic differentiation, since the number of function evaluations is O(N), where N is the total number of parameters. Finite differences are still useful, though: you can use them to validate a backprop implementation while you are debugging.
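To make the debugging use concrete, here is a minimal sketch of a gradient check. The one-neuron network, the function names and the sample values are all assumptions chosen for illustration. It compares a hand-derived chain-rule gradient against central finite differences.

```python
import math

def loss(w, x, y):
    """Squared error of a one-neuron network: tanh(w[0] * x + w[1])."""
    pred = math.tanh(w[0] * x + w[1])
    return 0.5 * (pred - y) ** 2

def analytic_grad(w, x, y):
    """Gradient from the chain rule, which is what backprop computes."""
    pred = math.tanh(w[0] * x + w[1])
    dloss_dpred = pred - y
    dpred_dz = 1.0 - pred ** 2          # derivative of tanh
    dloss_dz = dloss_dpred * dpred_dz
    return [dloss_dz * x, dloss_dz]     # d/dw[0], d/dw[1]

def numeric_grad(w, x, y, eps=1e-6):
    """Central finite differences: two loss evaluations per parameter."""
    grad = []
    for i in range(len(w)):
        w_plus = list(w)
        w_plus[i] += eps
        w_minus = list(w)
        w_minus[i] -= eps
        grad.append((loss(w_plus, x, y) - loss(w_minus, x, y)) / (2 * eps))
    return grad

w, x, y = [0.5, -0.3], 2.0, 1.0
backprop = analytic_grad(w, x, y)
approx = numeric_grad(w, x, y)
max_diff = max(abs(a - b) for a, b in zip(backprop, approx))
print("largest disagreement:", max_diff)
```

If the two gradients disagree by more than a tiny amount, the analytic (backprop) code is the usual suspect. Note that the numeric version needs two loss evaluations per parameter, which is why it is only practical as a check.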

Leveraging Learning Rate Decay:

The learning rate is a hyperparameter that controls how much the model changes in response to the estimated error each time the model weights are updated. Deep learning models are typically trained with the stochastic gradient descent algorithm or one of its variants. When you adapt the learning rate used for stochastic gradient descent during training, you can improve performance while reducing training time.

This is sometimes called learning rate annealing or an adaptive learning rate. The simplest and most widely used adaptation is to reduce the learning rate over the course of training. Larger learning rate values at the start of training allow bigger changes to the weights. The learning rate then shrinks, so smaller updates are made in the later part of the training procedure.
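As a rough sketch, three common decay schedules can be written as small functions. The function names, parameter names and sample values here are assumptions for illustration, not taken from any particular library.

```python
import math

def time_based_decay(initial_lr, decay, epoch):
    """Learning rate shrinks as 1 / (1 + decay * epoch)."""
    return initial_lr / (1.0 + decay * epoch)

def step_decay(initial_lr, drop, epochs_per_drop, epoch):
    """Learning rate is multiplied by `drop` every `epochs_per_drop` epochs."""
    return initial_lr * (drop ** (epoch // epochs_per_drop))

def exponential_decay(initial_lr, k, epoch):
    """Learning rate shrinks smoothly as exp(-k * epoch)."""
    return initial_lr * math.exp(-k * epoch)

# Print the first few values of each schedule side by side.
for epoch in range(0, 30, 10):
    print(epoch,
          time_based_decay(0.1, 0.1, epoch),
          step_decay(0.1, 0.5, 10, epoch),
          exponential_decay(0.1, 0.1, epoch))
```

All three start at the initial learning rate and only go down, so early updates are large and later updates are small.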

Get access to Fully Connected Neural Networks:

Fully connected feedforward neural networks are the standard network architecture used in the majority of neural network applications. Fully connected means that every neuron in one layer is connected to every neuron in the subsequent layer. Every neuron has an activation function that transforms the neuron’s output for a given input. These activation functions include:

Linear function:

This is a straight line that multiplies the input by a constant value.

Non-linear functions:

These include the sigmoid function, the hyperbolic tangent (tanh) function, and the rectified linear unit (ReLU) function.
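For reference, the activation functions listed above can be sketched in a few lines. The function names and the default constant `c` are assumptions for illustration.

```python
import math

def linear(x, c=1.0):
    """Straight line: multiply the input by a constant."""
    return c * x

def sigmoid(x):
    """Squashes any input into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def tanh(x):
    """Squashes any input into the range (-1, 1)."""
    return math.tanh(x)

def relu(x):
    """Passes positive inputs through and clips negatives to zero."""
    return max(0.0, x)

for value in (-2.0, 0.0, 2.0):
    print(value, linear(value, 3.0), sigmoid(value), tanh(value), relu(value))
```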

Every activation function has its own advantages and disadvantages. By stacking layers, we can build a deep neural network, and we can create many different networks by varying the inputs, outputs, hidden layers, activation functions, and number of neurons. Adding more of these increases the capacity of the network, along with the computing power required to train it.
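A minimal sketch of a forward pass through a tiny fully connected network may help here. The helper `dense`, the layer sizes and the weight values are made up for illustration. Every neuron takes a weighted sum of all the inputs from the previous layer, adds a bias, and applies its activation function.

```python
def dense(inputs, weights, biases, activation):
    """One fully connected layer; weights[j][i] links input i to neuron j."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        z = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(activation(z))
    return outputs

def relu(z):
    return max(0.0, z)

def identity(z):
    return z

x = [1.0, 2.0]                                   # 2 inputs
hidden = dense(x,
               [[1.0, -1.0], [0.5, 0.5], [-1.0, 1.0]],
               [0.0, 0.0, 0.0],
               relu)                             # 3 hidden neurons
output = dense(hidden, [[1.0, 2.0, 3.0]], [0.5], identity)  # 1 output neuron
print(hidden, output)
```

Changing the number of rows in a weight matrix changes the number of neurons in that layer, and adding another `dense` call adds another layer.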

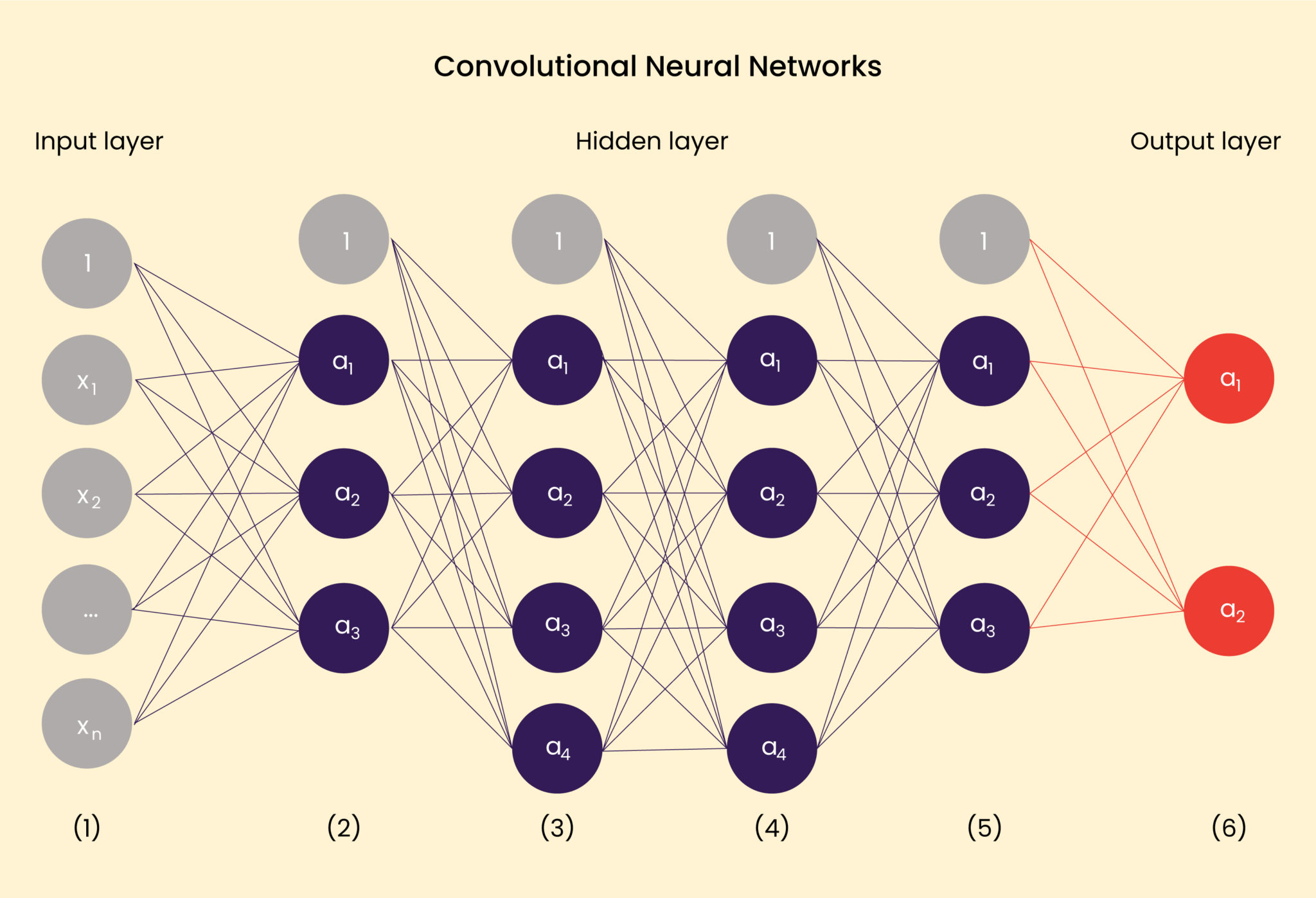

Get to know about Convolutional Neural Networks:

Convolutional Neural Networks (CNNs) are a kind of deep neural network architecture used to tackle tasks such as image classification. CNNs are inspired by the organization of neurons in the visual cortex of the animal brain. They are useful for processing different types of data such as audio, video and images. A CNN starts with an input layer. In basic image processing, the input is typically a 2-dimensional array of neurons corresponding to the pixels of the image.


Each neuron in a convolutional layer is connected to only a small cluster of neurons in the previous layer. The layer holds kernels, or filters, that determine these neuron clusters. CNNs work well for image recognition, image segmentation, image processing, and some natural language processing tasks. If you are using Convolutional Neural Networks, you need to know which applications they suit and why you are targeting that application. Only then will you get the best results, and those results are what help your business stand out in the market.
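To illustrate how a kernel looks at one small cluster of pixels at a time, here is a minimal sketch of a single 2D convolution with no padding and a stride of 1. The function name, the toy image and the edge-detecting kernel are assumptions for illustration. Like most deep learning libraries, it computes a cross-correlation, meaning the kernel is not flipped.

```python
def conv2d(image, kernel):
    """Slide the kernel over the image; each output value sees one small patch."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            total = 0
            for i in range(kh):
                for j in range(kw):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        out.append(row)
    return out

# Toy 3x4 image: dark on the left, bright on the right.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Kernel that responds where brightness increases from left to right.
kernel = [
    [-1, 1],
    [-1, 1],
]
feature_map = conv2d(image, kernel)
print(feature_map)
```

The feature map is non-zero only in the column where the dark-to-bright edge sits, which is the sense in which a filter detects a local pattern.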

A Deep Dive into Stochastic Gradient Descent:

Stochastic gradient descent (often abbreviated SGD) is an iterative method for optimizing an objective function with suitable smoothness properties. It can be regarded as a stochastic approximation of gradient descent optimization, because it estimates the gradient from a randomly selected subset of the data rather than from the entire data set. An intuitive way to think of gradient descent is as the path of a river that originates on a mountaintop. If the mountain terrain is shaped so that the river never has to stop anywhere along the way, nothing keeps it from reaching its destination.

In Machine Learning terms, the river has then found the global minimum (or optimum) of the solution, starting from an initial point on the terrain. On other terrains, the river might get trapped in a pit and stagnate, which corresponds to a local minimum. Terrains without such pits are a boon in Machine Learning. Stochastic gradient descent is popular for training models such as logistic regression (as in Vowpal Wabbit), support vector machines and graphical models.
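As a minimal sketch, here is SGD fitting a straight line to noise-free points. The data (generated from y = 2x + 1), the learning rate, the epoch count and the function name are all assumptions for illustration. Each update uses the gradient of the squared error on a single sample rather than on the whole data set.

```python
import random

def sgd_linear_regression(data, lr=0.1, epochs=500, seed=0):
    """Fit y = w * x + b by updating on one randomly ordered sample at a time."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    samples = list(data)
    for _ in range(epochs):
        rng.shuffle(samples)               # the "stochastic" part
        for x, y in samples:
            error = (w * x + b) - y        # prediction error on this sample
            w -= lr * error * x            # gradient of 0.5 * error**2 w.r.t. w
            b -= lr * error                # gradient of 0.5 * error**2 w.r.t. b
    return w, b

# Hypothetical training data on the line y = 2x + 1.
data = [(i / 10, 2.0 * (i / 10) + 1.0) for i in range(11)]
w, b = sgd_linear_regression(data)
print(w, b)
```

This squared-error terrain is bowl-shaped with a single minimum, so the river has nowhere to get stuck and the fitted values settle close to the slope and intercept used to generate the data.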

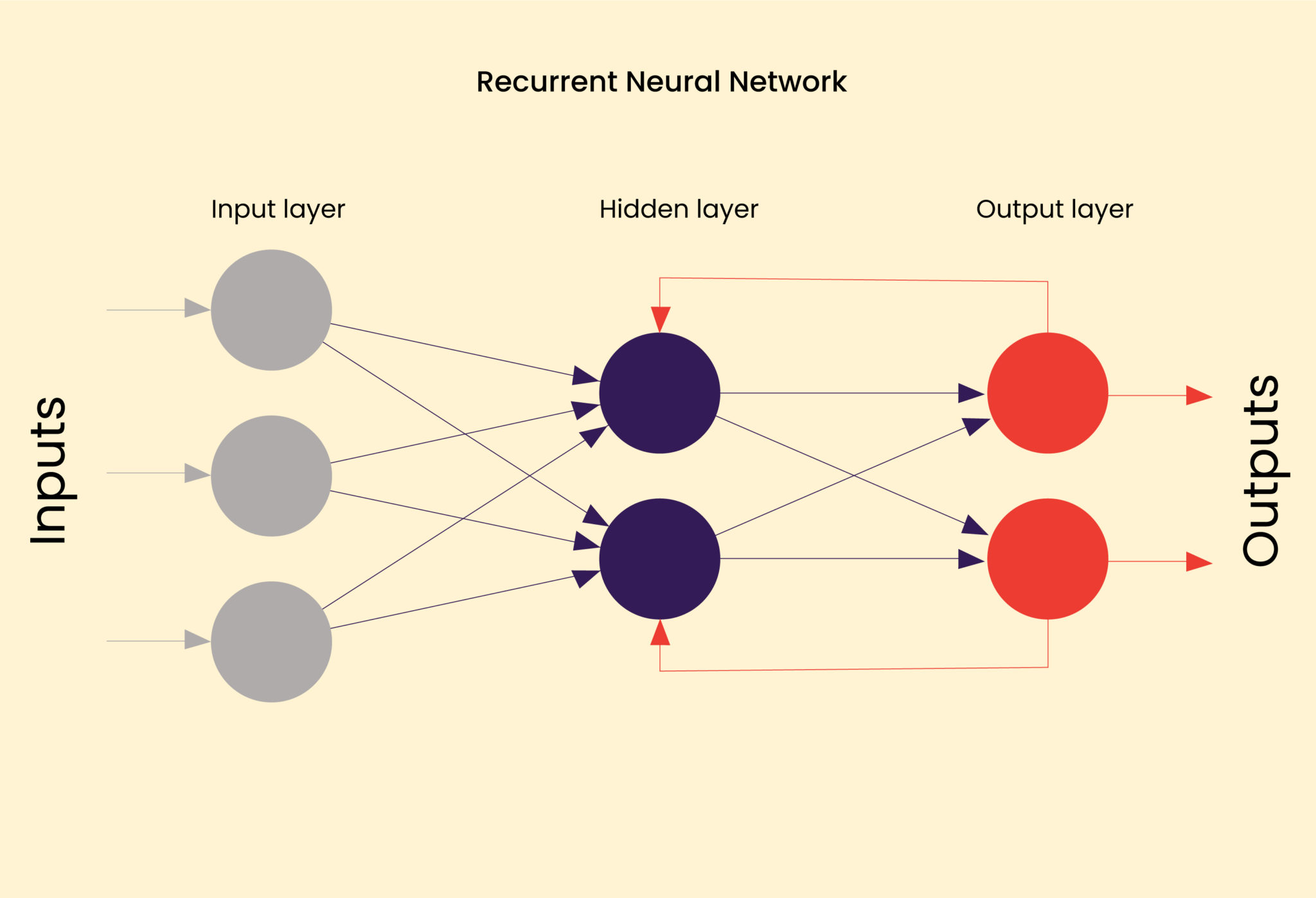

Let’s talk about Recurrent Neural Network:

A recurrent neural network (RNN) differs from a feedforward neural network in that it can operate on input data of variable length. An RNN makes use of the information from its previous state by taking it as an input to its prediction at the current step. This process can be repeated for an arbitrary number of steps, allowing the network to propagate information through its hidden state. This is similar to giving a neural network a short-term memory.
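The hidden-state idea can be sketched with a deliberately tiny recurrent cell. To keep it readable, this sketch assumes a single scalar hidden state and hand-picked weights (`w_x`, `w_h`, `b`) rather than the weight matrices and training a real RNN would use.

```python
import math

def rnn_step(x, h_prev, w_x, w_h, b):
    """New hidden state depends on the current input and the previous state."""
    return math.tanh(w_x * x + w_h * h_prev + b)

def run_rnn(sequence, w_x=0.5, w_h=0.8, b=0.0):
    """Apply the same cell to every element of a sequence of any length."""
    h = 0.0                      # initial hidden state
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_x, w_h, b)
        states.append(h)
    return states

short_states = run_rnn([1.0, 0.0, 0.0])
long_states = run_rnn([1.0, 0.0, 0.0, 0.0, 0.0])
print(short_states)
print(long_states)
```

The same function handles both sequence lengths, and the hidden state stays above zero after the input has dropped to zero, which is the short-term memory at work.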


Examples include time-series data such as stock price changes, and character sequences such as the stream of characters you type on your mobile phone. RNNs suit applications where the data is a sequence that changes over time. Applications of this deep learning model include speech recognition, natural language processing, image captioning, visual Q&A, language translation and conversation modelling. Neural networks are widely popular owing to applications like these, which can change the course of the world.

Get to know more on Generative Adversarial Networks:

A Generative Adversarial Network (GAN) is a combination of two deep learning neural networks: a Generator Network and a Discriminator Network. The Generator Network produces synthetic data, and the Discriminator Network tries to detect whether the data it sees is real or synthetic. The two networks are adversaries: each competes to beat the other. The Generator tries to produce synthetic data that is indistinguishable from real data. The Discriminator aims to get better at detecting fake data. This deep learning model is well suited to image generation, text generation, new drug discovery and many similar applications.
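The competition between the two networks shows up in their loss functions. The sketch below covers only the losses, not the networks or the training loop, and assumes the Discriminator outputs a probability that a sample is real. The function names are hypothetical, and the Generator loss shown is the commonly used non-saturating variant.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Low when D scores real samples near 1 and generated samples near 0."""
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """Low when D is fooled into scoring generated samples near 1."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)

# Made-up Discriminator outputs for illustration.
sharp_d = discriminator_loss(d_real=[0.9, 0.8], d_fake=[0.1, 0.2])
confused_d = discriminator_loss(d_real=[0.5, 0.5], d_fake=[0.5, 0.5])
fooling_g = generator_loss(d_fake=[0.9, 0.8])
caught_g = generator_loss(d_fake=[0.1, 0.2])
print(sharp_d, confused_d, fooling_g, caught_g)
```

The same Discriminator scores on fake data push the two losses in opposite directions, which is what makes the networks adversaries.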

Speaking about Deep Reinforcement Learning:

Deep learning techniques are all the rage, and reinforcement learning has found a place among them. Reinforcement learning involves an agent that interacts with an environment. The agent’s aim is to achieve some goal. It can observe the state of the environment, take actions, and learn from the resulting feedback how to interact with the environment in a way that achieves its goal.
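The agent and environment loop can be sketched with tabular Q-learning on a toy problem. This is a deliberate simplification: it uses a lookup table where deep reinforcement learning would use a neural network, and the five-state corridor, the reward and the hyperparameters are all assumptions for illustration.

```python
import random

N_STATES = 5          # states 0..4 on a line; state 4 is the goal
ACTIONS = (0, 1)      # 0 = move left, 1 = move right

def step(state, action):
    """Environment: returns (next_state, reward, done)."""
    next_state = max(0, state - 1) if action == 0 else state + 1
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done

def q_learning(episodes=500, alpha=0.5, gamma=0.9, seed=0):
    """Learn action values from experience gathered by acting at random."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            action = rng.choice(ACTIONS)              # explore at random
            next_state, reward, done = step(state, action)
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
    return q

q = q_learning()
# Greedy policy: in each non-goal state, pick the action with the higher value.
policy = [max(ACTIONS, key=lambda a: q[s][a]) for s in range(N_STATES - 1)]
print(policy)
```

Even though the agent behaves randomly while learning, the values it learns favor moving right in every state, which is the shortest route to the goal.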

Deep reinforcement learning uses deep neural networks to train such agents. Applications of Deep Reinforcement Learning include games such as poker and chess, self-driving cars, autonomous drones, robotics, retail industry tasks like resource allocation and inventory management, and finance-related tasks like portfolio design. With this methodology, you can get the most out of Deep Learning.

Let’s talk about Learning Rate Decay:

This is one of the most commonly used deep learning techniques. Adapting the learning rate of your stochastic gradient descent optimization can improve performance. This is also known as learning rate annealing or adaptive learning rates. You can use this technique to reduce the learning rate over a specified number of training epochs.

ResNet:

We use a Residual Neural Network, or ResNet, to make very deep neural networks easier and faster to train. A ResNet breaks a deep neural network down into smaller blocks of layers, which are connected via shortcut, or skip, connections that add a block’s input to its output.
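A minimal sketch of one residual block follows. It assumes plain lists for vectors, two small bias-free linear layers as the block’s inner function, and hypothetical names throughout. The key line is the addition of the input `x` to the block’s output.

```python
def relu(vector):
    return [max(0.0, v) for v in vector]

def linear(vector, weights):
    """Matrix-vector product; weights[j] holds the weights of output j."""
    return [sum(w * v for w, v in zip(row, vector)) for row in weights]

def residual_block(x, w1, w2):
    """Compute relu(x + F(x)), where F is linear -> relu -> linear."""
    fx = linear(relu(linear(x, w1)), w2)
    return relu([a + b for a, b in zip(x, fx)])   # the skip connection

x = [1.0, 2.0]
zeros = [[0.0, 0.0], [0.0, 0.0]]
y = residual_block(x, zeros, zeros)
print(y)
```

With all-zero weights the block simply passes its (non-negative) input through unchanged, so a block only has to learn a correction on top of the identity.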

Continuous Bag of Words:

Continuous Bag of Words (CBOW) is one of the model architectures used in Word2Vec. The CBOW model tries to predict a particular word from its context, where the context is represented by a number of surrounding words. You can use this method to improve natural language processing tasks like dependency parsing, part-of-speech tagging and much more.
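To show what predicting a word from its context means in terms of training data, here is a minimal sketch that builds (context, target) pairs from a sentence. The function name, the window size and the example sentence are assumptions for illustration. A real model would then learn word vectors from such pairs.

```python
def cbow_pairs(tokens, window=2):
    """For each word, collect up to `window` neighbors on each side as context."""
    pairs = []
    for i, target in enumerate(tokens):
        left = tokens[max(0, i - window):i]
        right = tokens[i + 1:i + 1 + window]
        context = left + right
        if context:
            pairs.append((context, target))
    return pairs

tokens = "deep learning uses neural networks".split()
pairs = cbow_pairs(tokens, window=1)
for context, target in pairs:
    print(context, "->", target)
```

Each pair asks the model to predict the target word given only its neighbors, with the window size controlling how many surrounding words count as context.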

To sum it up:

From image recognition to automated drones, there are not many areas that Deep Learning has not touched yet. It is a wide and broad field. To get the most out of it, make sure you are familiar with the most popular deep learning techniques in use today. Keep the techniques above in mind to get the best out of your deep learning pursuits.

Delve deeper into Deep Learning with Pattem Digital

At Pattem Digital, we are dedicated to providing comprehensive guidance on neural networks for deep learning and various deep learning methods. Our goal is to propel your AI journey forward. With our deep learning consulting company, you can quickly learn and harness different technologies. We have been an integral part of top-notch Deep Learning engineering teams, and we bring this expertise to the table. Engage in a conversation with us to determine the deep learning methods that best align with your application. As a deep learning service provider, we adhere to industry best practices, guaranteeing quality outcomes.

Let us join forces to make a significant impact on the Deep Learning market. Share your requirements with us, and we will implement the best strategies to elevate your business.