What is Hadoop?

Apache Hadoop is a 100% open-source framework that introduced a new way to process large enterprise data sets in a distributed fashion. Without it, you would need to rely on expensive and disparate data systems to store and process your data. With Hadoop, you get distributed parallel processing of large data sets across a cluster of inexpensive, industry-standard servers. It can store and process any kind of data. With Big Data Hadoop by your side, you will find that your data is not as big as it seems.

The architecture of Apache Hadoop

Apache Hadoop is a collection of open-source software utilities that make it possible to use a network of many computers to solve problems involving large amounts of data and computation. It provides a software framework for the distributed storage and processing of big data using the MapReduce programming model. The framework consists of four modules: Hadoop Common, the Hadoop Distributed File System (HDFS), Hadoop YARN, and Hadoop MapReduce. Hadoop Common contains the libraries and utilities that the other Hadoop modules require. HDFS stores your data on commodity machines and provides very high aggregate bandwidth across the cluster. Hadoop YARN is a resource-management platform responsible for managing the computing resources in a cluster and using them to schedule your users’ applications. Hadoop MapReduce is a programming model for large-scale data processing. When you adopt Apache Hadoop for your processes, make sure that you have the right team to support you.
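To make the MapReduce programming model a little more concrete, here is a minimal sketch of the classic word-count example in plain Python. It does not use any Hadoop API and runs in a single process; the function names (`map_phase`, `shuffle_phase`, `reduce_phase`, `word_count`) are hypothetical and chosen only for illustration. On a real cluster, the framework would run many map and reduce tasks in parallel on different machines and handle the grouping step itself.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple


def map_phase(line: str) -> Iterator[Tuple[str, int]]:
    """Map step: emit a (word, 1) pair for every word in one input line."""
    for word in line.lower().split():
        yield word, 1


def shuffle_phase(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Group emitted values by key (done by the framework between map and reduce)."""
    grouped: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key: str, values: List[int]) -> Tuple[str, int]:
    """Reduce step: collapse all values for one key into a single total."""
    return key, sum(values)


def word_count(lines: Iterable[str]) -> Dict[str, int]:
    """Run map, shuffle, and reduce in sequence in a single process (illustration only)."""
    pairs = (pair for line in lines for pair in map_phase(line))
    grouped = shuffle_phase(pairs)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


if __name__ == "__main__":
    sample = ["big data needs hadoop", "hadoop stores big data"]
    print(word_count(sample))
```

The point of the split is that each map call looks at only one line and each reduce call looks at only one key, so the work can be spread across as many machines as the cluster has.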

How to make use of the Big Data and Hadoop Architecture?

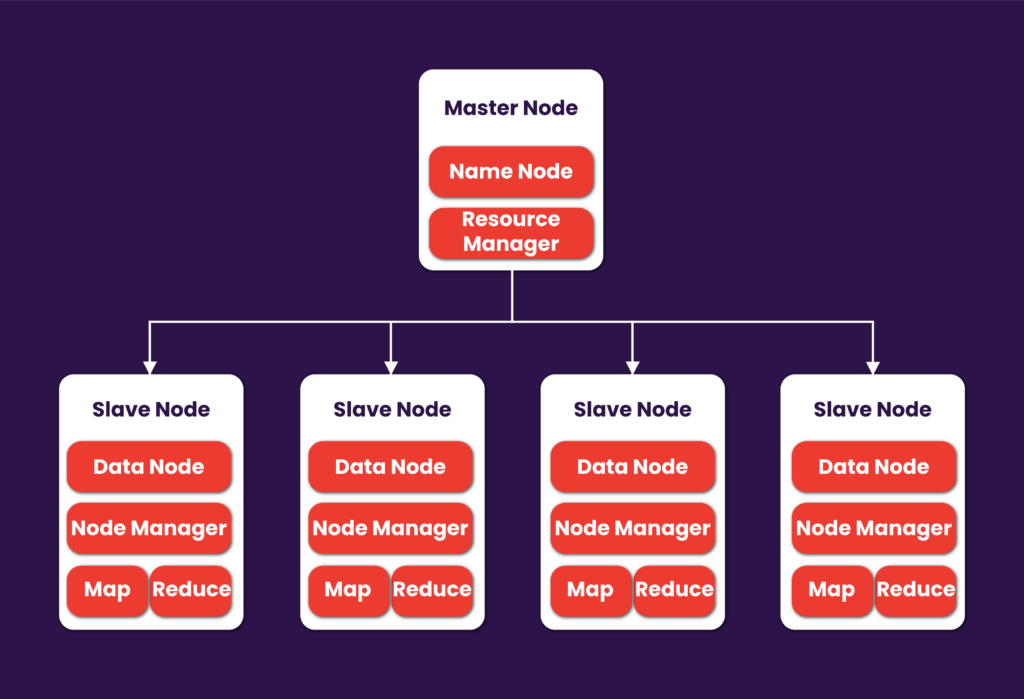

A small Hadoop cluster includes a single master and a number of worker nodes. The master node runs the JobTracker, TaskTracker, NameNode, and DataNode. A worker (or slave) node acts as both a DataNode and a TaskTracker, although it is also possible to have data-only and compute-only worker nodes. In a larger cluster, HDFS is managed through a dedicated NameNode server that hosts the file system index, along with a secondary NameNode that can generate snapshots of the NameNode’s memory structures. These snapshots help prevent file-system corruption and reduce data loss.
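The relationship between the NameNode's file system index and the DataNodes can be sketched with a small toy model. Everything here is an assumption made for illustration: `ToyNameNode` is a hypothetical class and not part of Hadoop, the block size and replication factor are just example values, and the round-robin placement is a simplification (real HDFS placement takes rack topology into account). The sketch only shows the idea that the NameNode records which DataNodes hold each block of a file, so that losing a worker node need not mean losing data.

```python
from typing import Dict, List, Set


class ToyNameNode:
    """Hypothetical stand-in for the NameNode's file system index (not a Hadoop API)."""

    def __init__(self, datanodes: List[str], block_size_mb: int = 128,
                 replication: int = 3) -> None:
        if replication > len(datanodes):
            raise ValueError("replication cannot exceed the number of DataNodes")
        self.datanodes = datanodes
        self.block_size_mb = block_size_mb  # assumed example value
        self.replication = replication      # assumed example value
        # Index: file name -> one list of replica locations per block.
        self.index: Dict[str, List[List[str]]] = {}
        self._cursor = 0  # round-robin position (a simplification)

    def add_file(self, name: str, size_mb: int) -> List[List[str]]:
        """Split a file into blocks and record which DataNodes hold each block."""
        n_blocks = max(1, -(-size_mb // self.block_size_mb))  # ceiling division
        blocks: List[List[str]] = []
        for _ in range(n_blocks):
            replicas = [
                self.datanodes[(self._cursor + i) % len(self.datanodes)]
                for i in range(self.replication)
            ]
            self._cursor = (self._cursor + 1) % len(self.datanodes)
            blocks.append(replicas)
        self.index[name] = blocks
        return blocks

    def blocks_lost_if_down(self, failed: Set[str]) -> int:
        """Count blocks whose every replica sits on a failed DataNode."""
        return sum(
            1
            for blocks in self.index.values()
            for replicas in blocks
            if set(replicas) <= failed
        )


if __name__ == "__main__":
    namenode = ToyNameNode(["dn1", "dn2", "dn3", "dn4"])
    print(namenode.add_file("logs.csv", size_mb=300))
    print(namenode.blocks_lost_if_down({"dn1"}))
```

In this toy model, a single failed DataNode never loses a block, because every block has replicas on other nodes. The index itself, however, lives only on the NameNode, which is why the snapshots taken by the secondary NameNode matter.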

Unleashing Big Data Hadoop: Necessity in the Age of Information Overload

In a fast-moving, highly connected world, more data is being created every day across a growing number of channels. Hadoop’s breakthrough advantage is that organizations and businesses can now find value in data that previously sat unused in raw form. Organizations have started to understand how important it is to analyze and categorize data. Big Data Hadoop matters most when it comes to storage: you scale by adding servers to your Hadoop cluster, and each new server adds storage and processing power to the cluster. This makes Hadoop data storage far less expensive than many other data storage approaches. Big Data and Hadoop have proved to be a powerful combination, and together they let you manage your data like a pro. With the volume of unstructured data growing at a rapid pace, you need Hadoop to run the right Big Data workloads. Its cost-effective, systematic, and scalable approach makes it a much-needed solution for any organization that wants to manage and process Big Data.

Forrester predicts that adopting Hadoop will become a must for enterprises. While some companies struggle with their Hadoop projects, it is important for businesses to take advantage of the capabilities the platform provides. With flexibility among the most sought-after advantages of Hadoop, businesses are finding it simple to make the best use of this platform. The following companies show how.

1. Marks and Spencer

In 2015, Marks and Spencer adopted Big Data to draw on a number of data sources and make better decisions. Its goal was to understand customer behavior better. The company used Hadoop to plug gaps in campaign management and to manage customer loyalty. Now it can predict which stock will be in demand and act accordingly.

2. Royal Mail

Royal Mail, the British postal service company, has used Hadoop to build its big data strategy and gain value from its internal data. Hadoop analytics tools were used to transform the way data was managed across the organization. Royal Mail can now identify customers in different industries, which helps its sales and marketing teams make more proactive decisions. It can also integrate technology with business decisions.

3. Royal Bank of Scotland

Royal Bank of Scotland has been using Hadoop (Cloudera Enterprise) to gain intelligence from its online chat conversations with customers. With big data analytics and management built on Hadoop, businesses such as RBS can apply machine learning and data wrangling to understand and map the customer journey.

Pattem Digital: Your Trusted Partner in Fulfilling Your Big Data Needs and Beyond

When you partner with Pattem Digital, you get to work with a team experienced in a range of Big Data projects. Our team can handle any of your requirements professionally. As a Hadoop development company offering 70+ services, including Big Data Hadoop, we help you build the best product. What’s more? Let us know your requirements, and we will support you from documentation to maintenance.